The title of my MA thesis is “Musical Mapping of Two-Dimensional Touch-Based Interfaces”. Phew. Its stated goal is to automate the mapping process for two-dimensional music interfaces. What does that mean?

OK, so let’s say you have an iPad app. And your app lets you make layouts of buttons to make music with – BeatSurfing, for example, or TouchOSC. And you have some giant grid of buttons: 12 x 12 or so. Wouldn’t it be nice if there were code that assigned notes (or ‘musical events’, etc.) to each button for you, instead of you meticulously assigning each button to a note yourself? That’s what “to automate the mapping process for two-dimensional music interfaces” means.

You can’t automate a thing unless you understand how it works. Most of the thesis, then, is me trying to figure out how people deal with musical controls and musical representations that exist in two dimensions. Which meant that I needed to look at musical scores, and at touchscreen apps – everything from Stockhausen scores to cat piano apps.

It turns out that most of these constructs have some recurring features. In Western music notation, for example, pitch increases as you move further up the staff. Pitch works the same way in sequencers and on instruments like dulcimers.

The question then becomes: are there more trends like this? (spoiler: yes) And if so, what are they? And then: can we automate the process of recognizing these constructs and applying the appropriate musical features to them?

Step one is to find all of these trends. To do that, I first looked at a lot (~375) of iOS music apps in pretty gory detail: I downloaded lots of them, I watched a lot of videos, and so on. I did some classification of apps, I made lists of how their mappings work, and I wrote a paper about it.

Then, I looked at every single iOS music app, but only at a very high level: just the descriptions and screenshots (yes, this was crazy; yes, I got hideous repetitive stress injuries). I made more classifications, I made more lists of mappings, I made more summaries. The paper for that one is here, and the associated dataset is here.

After that, for a total change of pace, I looked at 16 important graphic scores – Stockhausen, Brown, Cage, etc. And then, I, wait for it, made lists of mappings, and summarized mappings. Crazy, I know. That chunk was not accepted as a paper anywhere, but it is Chapter 4 of the thesis.

Before we go on, there are some things that I did not do. I did not ask any humans about this. I did no user studies, I didn’t approach things from a psychological, experimental viewpoint. Doing so is totally valid! But it is not what I did – I went with a raw data / let’s-see-what-is-out-there sort of methodology.

And what is out there? Basically, pitch increases from left to right, usually quantized to some convenient, happy scale. If pitch does not increase from left to right, it increases from top to bottom. Volume and timbre increase vertically. Time moves from left to right, except when pitch does, in which case it moves from top to bottom. This is not exciting stuff.
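To make the most common convention concrete, here is a minimal sketch of what automatically mapping a grid could look like: pitch rises left to right along each row, quantized to a major scale, and each new row starts a few scale steps above the previous one. Everything here is a hypothetical illustration, not the thesis's API: the function names, the MIDI note numbers, the default root (36) and the row offset of 3 scale steps (roughly a fourth) are all assumptions chosen so that a 12 x 12 grid stays inside the MIDI range.

```python
# Hypothetical sketch: scale-quantized, left-to-right pitch mapping for a grid.
# Names, defaults and the row offset are illustrative assumptions.

MAJOR_SCALE = [0, 2, 4, 5, 7, 9, 11]  # semitone offsets within one octave


def degree_to_midi(degree, root=36, scale=MAJOR_SCALE):
    """Convert a scale degree (0 = root) into a MIDI note number."""
    octave, step = divmod(degree, len(scale))
    return root + 12 * octave + scale[step]


def map_grid(rows, cols, root=36, row_step=3, scale=MAJOR_SCALE):
    """Return a rows x cols list of MIDI notes.

    Within a row, each button is one scale step above its left neighbour.
    Each row starts `row_step` scale steps above the row before it.
    """
    grid = []
    for r in range(rows):
        row = []
        for c in range(cols):
            note = degree_to_midi(r * row_step + c, root, scale)
            if not 0 <= note <= 127:
                raise ValueError(
                    f"button ({r}, {c}) maps to {note}, outside the MIDI range"
                )
            row.append(note)
        grid.append(row)
    return grid
```

For example, `map_grid(2, 3)` gives `[[36, 38, 40], [41, 43, 45]]`. Using an offset smaller than the row length means neighbouring rows overlap in pitch, which keeps a big grid like 12 x 12 playable without running off the top of the note range; a row offset equal to `cols` would instead make every button unique but would span far more octaves.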

The exciting stuff, when it does come, comes in small things away from the center. Borderlands is a gorgeous granular synth app for iOS; Hans-Christoph Steiner’s Solitude is an amazing score, all done in Pure Data. Halim El-Dabh writes improvisations based on ancient Egyptian colour notation. There are gamelan apps that do multi-touch timbre. And so on.

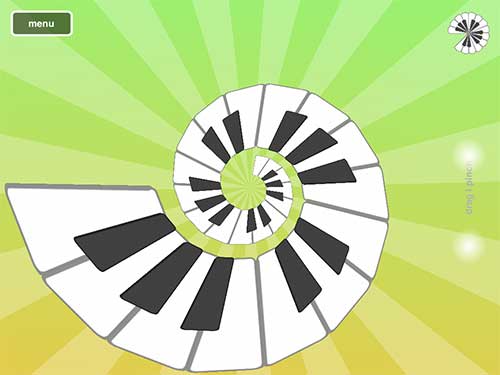

I did, however, find enough data in those three reviews to do step two, which was some meta-summarizing. In Chapter 5, I defined a bunch of abstractions of control layout (albeit for relatively unexciting control layouts). In other words: what is the minimum requirement for 12 buttons to be considered a piano? Is it color or shape or layout? (spoiler: layout) I did this for 14 layouts, from pianos to staff notation to mixing consoles, and also talked about how they map things. Based on this, I could actually do step three: build some tools to map some things.
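As an illustration of what “layout, not colour or shape” could mean in code, here is a hypothetical one-octave piano test. It is not the definition from Chapter 5; it simply assumes each button is given as an `(x, y)` centre, that a piano has exactly two rows of key positions, and that reading the keys left to right must produce the familiar white/black pattern. Colour and shape are never looked at. The function name, the input format and the exact-match comparison on `y` (real touch layouts would need a tolerance) are all assumptions.

```python
# Hypothetical sketch: recognize a one-octave piano purely from button positions,
# then map it chromatically. Input format and names are illustrative assumptions.

PIANO_PATTERN = "WBWBWWBWBWBW"  # C, C#, D, D#, E, F, F#, G, G#, A, A#, B


def recognize_piano(buttons, root=60):
    """Return {button_index: midi_note} if `buttons` (a list of (x, y) centres)
    forms a one-octave piano layout, else None."""
    if len(buttons) != 12:
        return None
    levels = sorted({y for _, y in buttons})
    if len(levels) != 2:  # a piano needs exactly two rows of keys
        return None
    counts = {lvl: sum(1 for _, y in buttons if y == lvl) for lvl in levels}
    # The row holding 5 buttons is treated as the "black key" row.
    black_level = next((lvl for lvl in levels if counts[lvl] == 5), None)
    if black_level is None:
        return None
    # Read the keys left to right and compare against the piano pattern.
    order = sorted(range(12), key=lambda i: buttons[i][0])
    pattern = "".join("B" if buttons[i][1] == black_level else "W" for i in order)
    if pattern != PIANO_PATTERN:
        return None
    # Chromatic mapping: the leftmost key gets the root, each next key a semitone up.
    return {i: root + semitone for semitone, i in enumerate(order)}
```

With seven “white” buttons at `x = 0..6` on one row and five “black” buttons at `x = 0.5, 1.5, 3.5, 4.5, 5.5` on the other, the function returns a C-to-B mapping starting at MIDI note 60; a plain 3 x 4 grid of the same 12 buttons returns `None`, whatever colour it is painted.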

Chapter 6 is the engineering: I wrote an API that can recognize and automatically map most of the abstractions listed in Chapter 5. It’s an open, HTTP API – the documentation is here. I also made a demo webapp to show it off – you’ll need Chrome or Safari to make it work. There is also now, finally, an iOS app! You can find it here.

Phew. That is the thesis. You can read the whole thing here – I hope it has some magic for you.

Thanks for reading!